Inside Whisker Haven: How I Built an AI-Powered Cat Adoption Chatbot with RAG & GPT-3.5

June 26, 2025

I built WhiskerBot, an AI chatbot for pet adoption, using a RAG pipeline combining vector search with GPT-3.5. This blog breaks down the architecture, intent detection, query parsing, semantic filtering, and multilingual AI response generation. Fully open-source, powered by OpenAI + MongoDB.

🎬 See WhiskerBot in Action

⚙️ Technical Foundation

This chatbot uses Retrieval-Augmented Generation (RAG):

- Retrieves relevant cat profiles using vector search (MongoDB Atlas + OpenAI embeddings)

- Generates personalized responses with GPT-3.5-turbo

- Ensures factually grounded yet natural conversations

What started as a fun passion project blending my love for cats with a curiosity for AI quickly evolved into a full-stack, AI-powered platform using LLMs and vector databases to drive real-world adoption workflows.

In this blog, I’ll walk you through how I built WhiskerBot, from understanding what users actually want, to using semantic search and GPT-3.5 to match the right “vibe,” and even handling those quirky, unexpected questions people love to throw at chatbots (because, let’s be honest… people are wild 🙈).

You’ll see real code snippets, insights into using OpenAI’s GPT-3.5, how I stored and searched cat profiles with MongoDB Atlas, and how the backend runs on Node.js + Express.

Let's jump right in!

1. Intent Classification: Understanding What Users Really Want

The very first step when a user types something is figuring out what they want.

Are they:

- Looking for a cat?

- Asking about adoption?

- Curious about wellness?

- Or just totally off-topic (like "Why do I crave pizza at 3 AM?" seriously, whyyyyy 😭?).

Instead of immediately processing every input as a search or action, we first pass the query through a function called classifyUserIntent. This function uses the openai.chat.completions.create method (which allows us to interact with OpenAI's GPT models (I'm using gpt-3.5-turbo in this project).

Because Whiskers Haven is focused on cat adoption, we intentionally limit the scope to keep the chatbot aligned with the purpose of the platform. The supported intents in this website are:

cat_searchadoption_questiondonationcat_wellnessInfooff_topic

Why this matters:

- Keeps our bot focused and efficient

- Avoids wasting resources on irrelevant stuff

- Manages user expectations (sorry, no pizza therapy here)

async function classifyUserIntent(query) {

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [

{

role: "system",

content: `Classify the user message as one of:

[cat_search, adoption_question, donation,

cat_wellnessInfo, off_topic]. Strictly return

only one category based on the user's intent.`,

},

{ role: "user", content: query },

],

});

return response.choices[0].message.content.trim();

}2. Parsing Natural Language Queries: Turning Words Into Filters

Okay, so once we know the user's intent is cat_search, it's time to figure out what kind of cat they're actually looking for.

Users don't say:

{ age: { $lt: 4 }, gender: "Male", good_with_children: true }They say: "I want a playful male cat who's good with kids and under 4 years old."

So our job is to turn that natural sentence into a structured query MongoDB can understand.

So... how do we do this?

Yup, OpenAI to the rescue again. We pass the user's sentence to a function called parseQuery() that uses openai.chat.completions.create() to extract relevant fields (age, gender, etc.) and captures fuzzy vibe words like "cuddly" or "calm" that don't map directly to database fields but do matter.

Example:

User query: "Looking for a calm, elderly female cat"

Parsed output:

{

"age": { "min": 8 },

"gender": "Female",

"semantic_terms": "calm"

}💡 Pro Tip:

Please set temperature to ~0.1. That makes the AI stick to facts instead of going creative as we want the values to directly map to fields in our DB.

async function parseQuery(naturalLanguageQuery) {

const prompt = `

### Output Fields:

{

"age": {"min": number, "max": number} or number,

"activity_level": "Low" | "Moderate" | "High",

...

"semantic_terms": "descriptive keywords or behavioral traits or breed names"

}

### Output Format:

Only return a **valid, compact JSON object**.

Query: "${naturalLanguageQuery}"

`;

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [{ role: "user", content: prompt }],

temperature: 0.1,

});

return response.choices[0].message.content.trim();

}3. Building the Smart Search Pipeline: Semantic + Exact Filtering

After parsing, you get something like:

parsedParams = {

age: { max: 3 },

gender: 'Male',

good_with_children: true,

vaccinated: true,

semantic_terms: 'cuddly'

}Notice the first few fields map directly to DB fields EASYYYY to filter. But "cuddly" is a vibe word. How do we handle that? This is where things get interesting.

Data Indexing: Preparing Our Data for Smart Search

Before we can start searching and answering user questions effectively, we first need to index our data properly. Indexing means organizing the data in a way that makes searching fast and meaningful.

In our case, the data is the collection of cat profiles : their descriptions, stories, and behavioral details. The goal is to create a searchable database that understands not just keywords but the meaning behind what users ask for.

Step 1: Vector Search – Filtering by Vibes

Imagine you're shopping online for a "chic black partywear dress in size S." You wouldn't just type in every exact word , instead, you first pick the vibe (partywear), then filter by color and size.

Similarly, when a user searches for a "cuddly" cat, they want a cat with a certain affectionate vibe even if the word "cuddly" isn't directly written in the cat's profile.

How Vector Embeddings Help Us Find the Perfect Cat

Vector embeddings helps to convert text into numerical values that represent the meaning of the text, not just the words.

For WhiskerBot:

- We combine each cat's description and story ,rich with behavioral and background info and generate an embedding vector

- These embeddings get stored in our MongoDB database

- When a user searches, their query text is also converted into an embedding vector

- We then compare the user's query vector with all the cat embeddings to find the closest matches ,cats whose descriptions "feel" like what the user wants

How We Generate Embeddings for Cats

We use OpenAI's affordable and effective text-embedding-3-small model to generate these vectors:

// Generate embedding for cat description + story text

async function generateCatEmbedding(text) {

const response = await openai.embeddings.create({

model: "text-embedding-3-small",

input: text,

});

return response.data[0].embedding;

}Building the Vector Index in MongoDB

To search embeddings quickly, MongoDB Atlas provides a vector index that efficiently compares semantic vectors.

Here's what happens:

- Each cat document in MongoDB has an embedding field storing its vector

- We create a vector index on this field (cat_embedding_index)

- When a user searches, we generate a query vector for their search term

- MongoDB uses $vectorSearch to find the nearest embeddings (cats) to the query vector

// Example of a MongoDB vector search query

$vectorSearch: {

index: "cat_embedding_index",

path: "embedding",

queryVector: queryEmbedding,

numCandidates: 200,

limit: 100,

}The Data Flow from Query to Results

- User Input: The user types a search like "cuddly cat."

- Query Embedding: The search text is converted into a vector embedding

- Vector Search: MongoDB finds cats whose embeddings are closest to this query vector

- Filter & Rank: The results are filtered and ranked for the best semantic match

- Display: WhiskerBot shows cats that match the user's vibe even if the word "cuddly" doesn't appear explicitly in their profile

Step 2: Exact MongoDB Filters

Once we have a smaller, vibe-matched group, we apply exact filters like age, gender, vaccination status.

This two-step pipeline ensures we get cats that feel right and match your practical needs.

To dive deeper into the code, check out the implementation in my GitHub repo:

📁 /services/openaiService.js

🔧 Functions: buildAtlasSearchPipeline(), buildMongoFilters()

And finally Run the pipeline using Model.aggregate(pipeline) and hurayyyy! You've just executed the most crucial step of the recommendation engine.

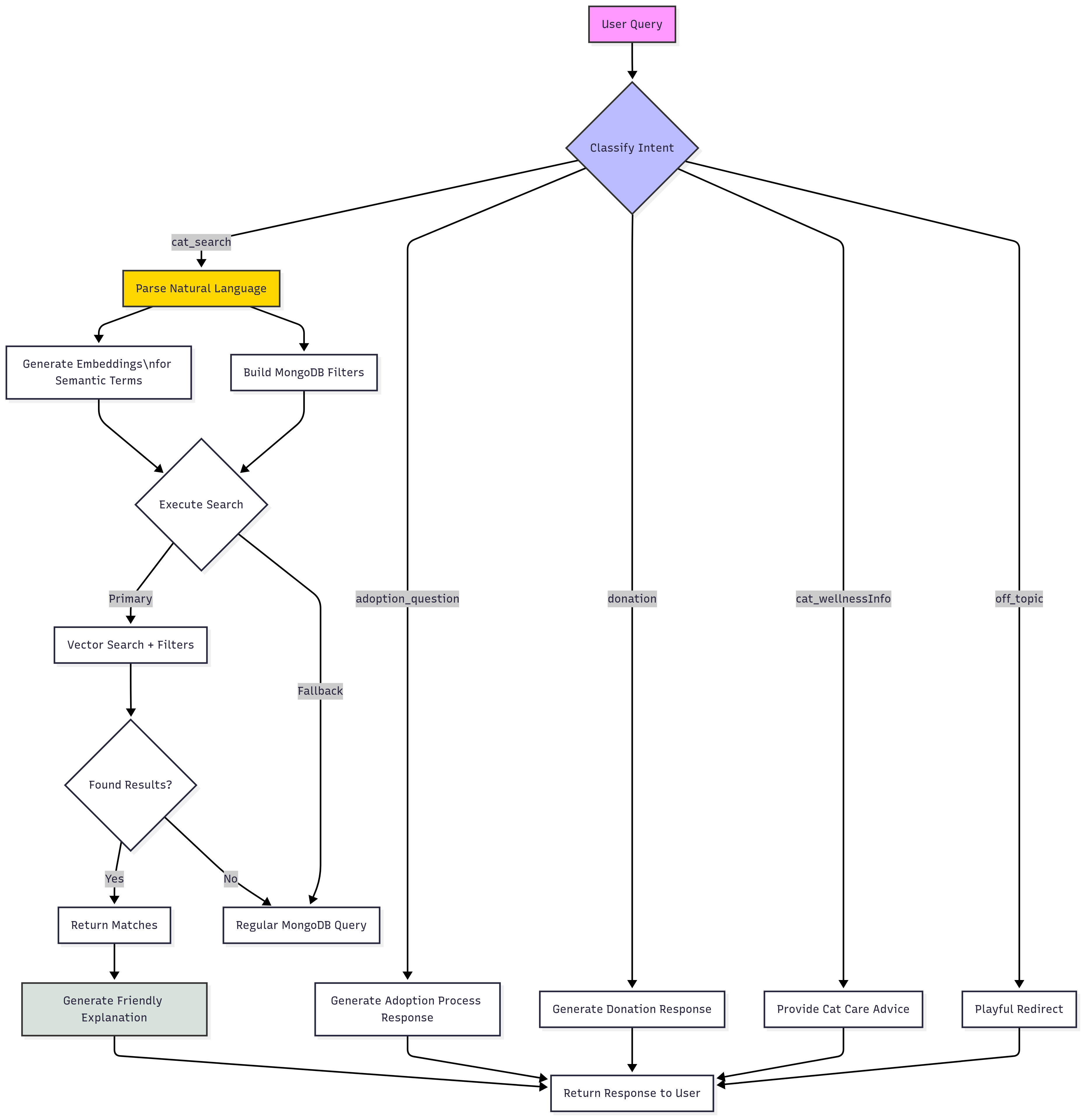

4. Architecture Diagram

Here's a visual representation of how WhiskerBot processes user queries and delivers personalized cat recommendations:

Complete workflow showing intent classification, query parsing, vector search, and response generation

This diagram illustrates the complete flow from user input to final response, showcasing how each component works together to deliver accurate and personalized results.

5. Multilingual Prompt Engineering for Cat Match Explanations

Once the RAG pipeline returns cat profiles, raw JSON isn't enough, users connect better with personalized narratives.

We feed both the user's query and search results into generateSearchExplanation(), which uses openai.chat.completions.create() to generate custom, emotionally resonant explanations for why each cat was selected.

It even supports multilingual output, just pass an ISO code like en or tr, and GPT will respond in the appropriate language.

Prompt tuning tips:

- Temperature: ~0.8 for creative yet informative replies

- Max tokens: ~400 for concise explanations

- Prompt structure: Clear tone and list formatting yield best results

Example prompt template:

GENERATE:

ISO LANGUAGE CODE: ${language}

STRICTLY respond in ${language} using:

1. Opening sentence referencing user query

2. Numbered list of 3 matching cats:

- **Name**, age, breed

- 1-2 lines on why they match🔍 Curious how it works under the hood? Explore these core functions:

generateSearchExplanationbuildSearchPromptgenerateAIResponse

5. Handling Other User Intents

Not every question is about finding a cat.

For adoption info, donations, or wellness tips, the flow is simpler but follows the same pattern: Call OpenAI's chat completions with tailored prompts to generate friendly, helpful answers.

Example: adoption process explanation:

async function generateAdoptionResponse(query, language = "en") {

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [

{

role: "system",

content: `

ISO CODE LANGUAGE: ${language}

STRICTLY reply in ${language}

Generate a friendly message explaining 6 steps of the adoption process.`,

},

{ role: "user", content: query },

],

});

return response.choices[0].message.content.trim();

}6. How to Answer Open Ended Questions?

When users ask wild stuff like:

- "What should I wear today?"

- "Why do I crave pizza at 3 AM?"

- Or the existential classic: "Should I rethink my life decisions… by asking a cat bot?"

Bruhhhhhhhh Whyyyyyyyyyyyy. This is WhiskerBot, not your midnight therapist or fashion consultant.

So what do we do?

We flag these as off_topic in Step 1, and then just like in Step 4 we use the magic of openai.chat.completions.create() to handle them gracefully.

We craft a clever, friendly prompt that gently redirects the user back to reality. The bot stays polite, a little sassy (PRO TIP: keep the temperature high for maximum creativity), and totally on brand.

So the next time someone tries to trauma-dump on our purr-bot, don't worry WhiskerBot's got pawsitive boundaries.

Wanna see some clever responses?

Query: "Why do all superheroes wear underpants on the outside?"

Response: "🐱 Whiskers and whisker biscuits! Maybe superheroes just forgot laundry day? 🦸♂️ Either that or their cats told them it's the latest fashion trend. Meanwhile, I'm here to find your cat a cape—no embarrassing laundry mishaps guaranteed! 😹🦸♀️"

7. Display on Frontend

For the frontend, I used EJS templates combined with Tailwind CSS for quick and responsive styling.

Once we get the AI-generated explanation and the array of cat documents from the backend, it's as simple as passing that data to the EJS view and rendering it nicely on the page.

This way, users see a clean, friendly, and engaging summary along with the cat profiles , No raw JSON in sight!

Tailwind helps keep the UI sleek and mobile-friendly without writing tons of custom CSS.

Here's a quick example of how I passed data to the EJS template:

res.render('results', {

explanation: aiExplanation,

cats: catDocuments,

});The End (But Really, Just the Beginning!) 🐾💖

Well, that's how we built this cat-loving bot! I hope you found it fun and maybe even feel inspired to create your own.

Honestly, my love for cats was one of my biggest inspirations for creating this website. In a world where life gets messy and people can change, animals remain the purest souls, they love you unconditionally, judge silently, and still knock things off tables like they own the place.

This project is my tiny way of helping cats find warm laps and happy homes. And yes, the moment I graduate, I'm adopting one (or maybe three… 🙃). If you're ready, maybe you can too. Nothing beats a purring little weirdo becoming family.

🔥 Coming Up Next:

Ever wondered how Reddit's famous nested comments work? I'll take you behind the scenes and show you exactly how I built a Reddit-style nested comment system in this WhiskerHaven project with all the details and coding.

You won't want to miss it!

👉 Try it out here: View the nested comments in action

Thanks for reading! Now go pet a cat (or build a bot for them). Have a pawsome day! 😽

Love you guys for sticking around till the very end!! byeee byeeee 🫶 Take careeeee! 💖

Technologies Used in WhiskerBot:

Made with ❤️ by Kashish